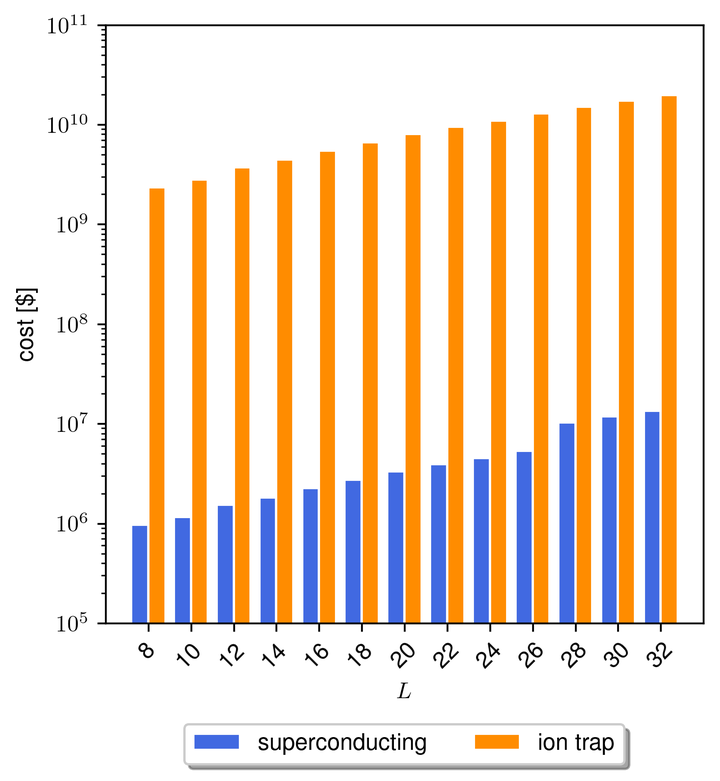

Results of cost analysis for the Hubbard model.

Abstract

We present a simple performance model for estimating the qubit count and runtime of large-scale error-corrected quantum computations. Our estimates extrapolate the current usage costs of quantum computers and show that computing the ground state of the 2D Hubbard model, which is widely believed to be an early candidate for practical quantum advantage, could start at a million dollars. The model shows a clear cost advantage, of up to four orders of magnitude, for quantum processors based on superconducting technology over ion-trap devices. Usage costs, while substantial, will not necessarily block the road to practical quantum advantage. Furthermore, the combined effects of algorithmic improvements, more efficient error correction codes, and R&D cost amortization are likely to reduce costs by orders of magnitude.